You Can Be a Musician by Learning the Web Audio API

According to the Web Audio API specification, the Web Audio API is a high-level JavaScript API for processing and synthesizing audio in web applications. In simple terms, you can manipulate sound in web pages, including but not limited to generating, processing, and synthesizing it. This broadens what you can design for web applications.

Because it is a fairly complex API with far more functionality than a single short post can cover, I will give just a simple example of how to generate and manipulate sound.

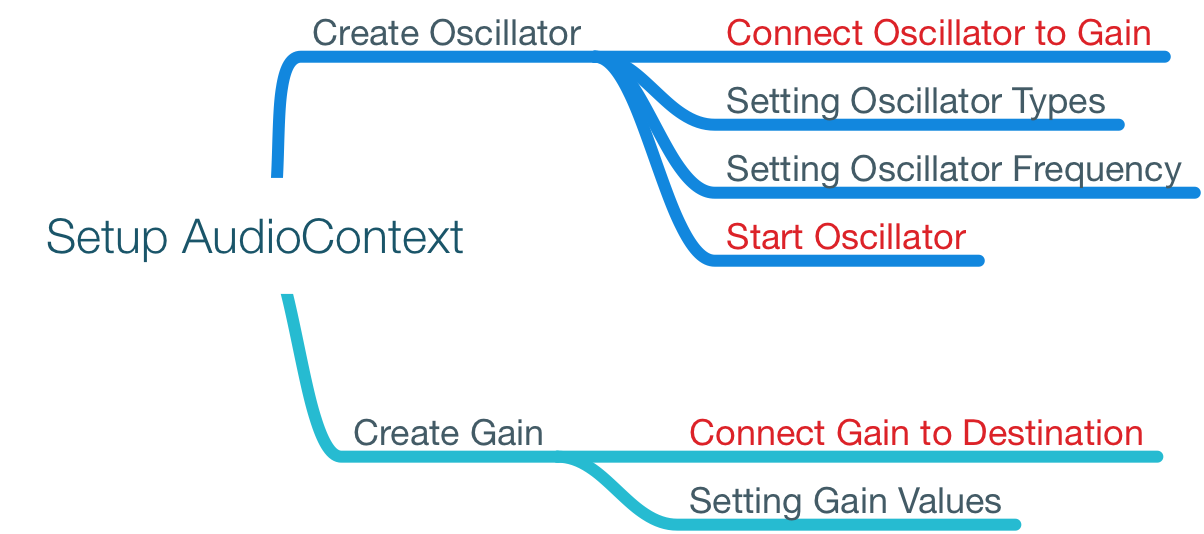

The figure illustrates the sound-manipulation chain, and the detailed steps are described below.

Illustration of simple sound generation using Web Audio API

Creating AudioContext:

AudioContext is available as a property of the global window object. The code here and in the later sections of this post was tested in Chrome only.

var ctx = new AudioContext();

Creating Oscillator:

As in the physical world, sound comes from oscillation, so an oscillator is the basic source for generating sound.

var oscillator = ctx.createOscillator();

Creating Gain:

To control the volume of the sound, we need a gain node that the oscillator can feed into.

var gain = ctx.createGain();

Setting up the audio manipulation chain:

The Web Audio API routes audio through a graph of connected nodes, which in this example is a simple chain. We need to connect the oscillator to the gain, and the gain to the default destination of the audio processing (normally the speakers or earphones).

oscillator.connect(gain);

gain.connect(ctx.destination);

Adjusting parameters of Oscillator and Gain:

We can adjust the parameters of the oscillator and the gain. The values in the following code are the defaults, so these lines can be omitted.

oscillator.type = "sine";

oscillator.frequency.value = 440;

gain.gain.value = 1;
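Since the frequency is a plain number in hertz, playing a specific musical note comes down to computing the right value. The following is a minimal sketch, assuming equal temperament with A4 tuned to 440 Hz. The helper name `midiToFrequency` is hypothetical and not part of the Web Audio API.

```javascript
// Convert a MIDI note number to a frequency in hertz.
// Assumes equal temperament with A4 (MIDI note 69) tuned to 440 Hz.
// `midiToFrequency` is an illustrative helper, not a Web Audio API function.
function midiToFrequency(midiNote) {
  return 440 * Math.pow(2, (midiNote - 69) / 12);
}

// Hypothetical usage in a browser, with the oscillator created earlier:
// oscillator.frequency.value = midiToFrequency(60); // middle C
```

Each step of 12 in the note number doubles or halves the frequency, so note 81 is one octave above A4.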

Start the Oscillator:

The oscillator must be started to generate sound.

oscillator.start();
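The steps so far can be gathered into one function. This is only a sketch: the name `startTone` and its option names (`type`, `frequency`, `volume`) are assumptions made for illustration. It also starts the sound from a click, because browsers such as Chrome may keep an AudioContext suspended until the user interacts with the page.

```javascript
// Build the oscillator -> gain -> destination chain on a given AudioContext
// and start the oscillator. `startTone` and its option names are illustrative.
function startTone(ctx, options) {
  var settings = options || {};
  var oscillator = ctx.createOscillator();
  var gain = ctx.createGain();

  // Fall back to the API defaults when an option is not given.
  oscillator.type = settings.type || "sine";
  oscillator.frequency.value =
    settings.frequency !== undefined ? settings.frequency : 440;
  gain.gain.value = settings.volume !== undefined ? settings.volume : 1;

  oscillator.connect(gain);
  gain.connect(ctx.destination);
  oscillator.start();

  // Return both nodes so the caller can adjust or stop the sound later.
  return { oscillator: oscillator, gain: gain };
}

// Browser-only usage: wait for the first click before producing sound.
if (typeof document !== "undefined" && typeof AudioContext !== "undefined") {
  document.addEventListener("click", function () {
    var ctx = new AudioContext();
    ctx.resume().then(function () {
      startTone(ctx, { frequency: 440, volume: 0.5 });
    });
  }, { once: true });
}
```

Calling `oscillator.stop()` on the returned oscillator ends the sound. An oscillator cannot be restarted after it stops, so a new one has to be created for the next note.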

More controls can be added:

We can go further and use the position of the mouse to control the frequency and the gain of the generated sound.

document.onmousemove = function(e) {

  oscillator.frequency.value = e.clientX / window.innerWidth * 1000;

  gain.gain.value = e.clientY / window.innerHeight * 2;

};

As shown above, the Web Audio API is already powerful enough for you to develop your own web-based audio studio.
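The mouse mapping used in the handler can also be pulled out into a small pure function, which makes the ranges explicit and easy to check. This is a sketch only: the name `pointerToSound` is hypothetical, and clamping the ratios to the 0 to 1 range is an added assumption that the original handler does not make.

```javascript
// Map a pointer position to a frequency (0 to 1000 Hz) and a gain (0 to 2),
// using the same scaling as the mouse handler in this post.
// `pointerToSound` is an illustrative helper; the clamping is an assumption.
function pointerToSound(x, y, width, height) {
  var xRatio = Math.min(Math.max(x / width, 0), 1);
  var yRatio = Math.min(Math.max(y / height, 0), 1);
  return { frequency: xRatio * 1000, gain: yRatio * 2 };
}

// Hypothetical browser usage with the oscillator and gain created earlier:
// document.onmousemove = function (e) {
//   var sound = pointerToSound(e.clientX, e.clientY,
//                              window.innerWidth, window.innerHeight);
//   oscillator.frequency.value = sound.frequency;
//   gain.gain.value = sound.gain;
// };
```

Keeping the arithmetic separate from the event handler means the mapping can be changed, for example to a different frequency range, without touching the audio nodes.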

Some Web Audio libraries and frameworks for reference: